M4L.RhythmVAE for Ableton Live: A rhythm generation model for musicians

How should we design an AI music generation system that enhances users’ creativity? Is it enough to use AI tools provided by big companies?

Background

As more and more AI-based music generation tools appeared, we began to ask ourselves the following questions.

How much of the music is “made” by the tool, and how much is made by us, the artist and musician?

Will the use of AI tools created by large companies like Google and Amazon contribute to enhancing the creativity of users (artists), or will it lead to cultural uniformity?

To answer these questions, we set out to build a music generation tool that lets users, ordinary musicians, train AI models by themselves and use them immediately in their own creative work. In particular, we focused on rhythm patterns and built a system that learns from MIDI data the user has collected in music production software (a DAW).

Technology

For the deep learning architecture, we chose a Variational Autoencoder (VAE). To speed up training and make it workable with a small amount of training data, we built the model using only feedforward layers. In addition, the latent vectors are two-dimensional, so that rhythms can be generated in real time by inputting latent vectors on our software UI.
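To make the architecture concrete, here is a minimal sketch of a feedforward-only VAE with a two-dimensional latent space, written in plain Python for readability. It is not the device's actual code: the grid size (3 drums by 16 steps), the hidden size, the ReLU and sigmoid activations, and the binary cross-entropy loss are all assumptions chosen for illustration, and the weights are random rather than trained.

```python
import math
import random

# Hypothetical sizes, chosen only for illustration (not from the original text).
N_DRUMS, N_STEPS = 3, 16
INPUT_DIM = N_DRUMS * N_STEPS   # flattened on/off grid
HIDDEN_DIM = 32
LATENT_DIM = 2                  # two-dimensional latent space, as described above


def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for a dense layer with small random weights."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, activation=None):
    """Apply one feedforward (fully connected) layer to the vector x."""
    weights, biases = layer
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [activation(o) for o in out] if activation else out


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


class TinyVAE:
    """Untrained feedforward VAE: the encoder outputs (mu, logvar), the decoder outputs hit probabilities."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.enc_hidden = make_layer(INPUT_DIM, HIDDEN_DIM, self.rng)
        self.enc_mu = make_layer(HIDDEN_DIM, LATENT_DIM, self.rng)
        self.enc_logvar = make_layer(HIDDEN_DIM, LATENT_DIM, self.rng)
        self.dec_hidden = make_layer(LATENT_DIM, HIDDEN_DIM, self.rng)
        self.dec_out = make_layer(HIDDEN_DIM, INPUT_DIM, self.rng)

    def encode(self, x):
        h = dense(x, self.enc_hidden, relu)
        return dense(h, self.enc_mu), dense(h, self.enc_logvar)

    def sample(self, mu, logvar):
        # Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1).
        return [m + math.exp(0.5 * lv) * self.rng.gauss(0.0, 1.0)
                for m, lv in zip(mu, logvar)]

    def decode(self, z):
        h = dense(z, self.dec_hidden, relu)
        return dense(h, self.dec_out, sigmoid)


def vae_loss(x, x_hat, mu, logvar):
    """Binary cross-entropy reconstruction term plus KL divergence to N(0, I)."""
    eps = 1e-7
    recon = -sum(t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
                 for t, p in zip(x, x_hat))
    kl = -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))
    return recon + kl
```

Training would minimize `vae_loss` over the user's rhythm patterns. At generation time only `decode` is needed, so a point picked in a two-dimensional UI can be turned into a rhythm directly.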

It’s worth noting that this device is not meant to be a general-purpose rhythm generation system. We expect and encourage users to train on unbalanced data, and we hope this leads to interesting, unexpected rhythm patterns.

VAE Training

Rhythm Generation with a trained model

Application

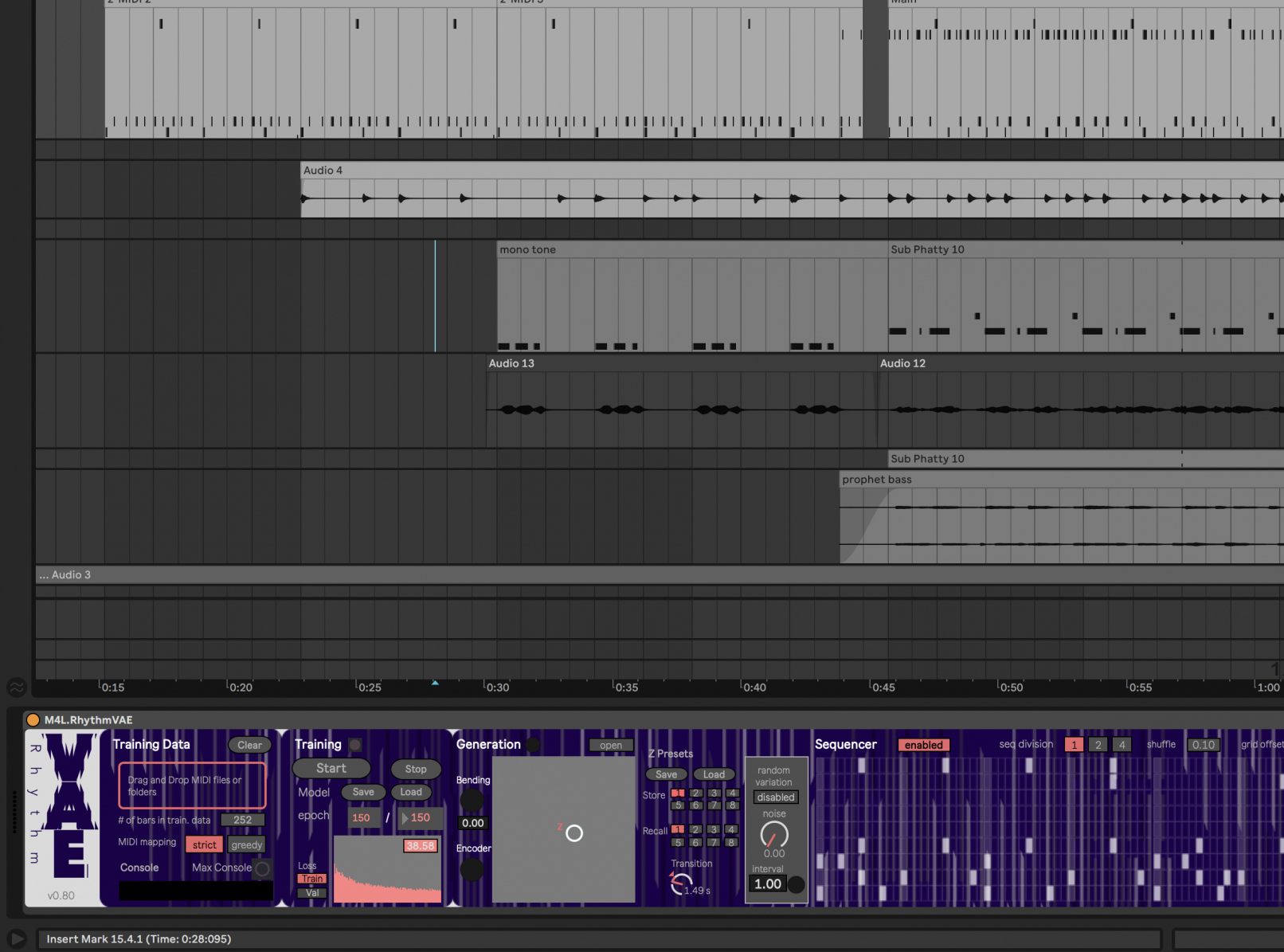

In this study, we selected Ableton Live as the target music production software. We have packaged all the training and generation mechanisms as a single Ableton Live Max for Live device.

To train a VAE model, users simply drag and drop MIDI data containing rhythm patterns onto the device. Once the model is trained, dynamically changing rhythms can be generated by feeding it gradually changing latent vectors.
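The idea of feeding gradually changing latent vectors can be sketched as follows. The circular path, the 0.5 threshold, and `toy_decoder` are all hypothetical: `toy_decoder` is only a stand-in for a trained decoder so that the example runs on its own, and it is not how the device computes rhythms.

```python
import math


def circular_latent_path(n_points, radius=1.0, center=(0.0, 0.0)):
    """Return n_points 2D latent vectors evenly spaced on a circle."""
    points = []
    for i in range(n_points):
        angle = 2.0 * math.pi * i / n_points
        points.append((center[0] + radius * math.cos(angle),
                       center[1] + radius * math.sin(angle)))
    return points


def to_onsets(probabilities, threshold=0.5):
    """Turn per-step hit probabilities into a binary on/off pattern."""
    return [1 if p >= threshold else 0 for p in probabilities]


def toy_decoder(z, n_steps=16):
    """Hypothetical stand-in for a trained decoder: maps (zx, zy) to per-step probabilities."""
    zx, zy = z
    probs = []
    for step in range(n_steps):
        activation = 4.0 * (zx * math.cos(math.pi * step / 2.0)
                            + zy * math.sin(math.pi * step / 4.0))
        probs.append(1.0 / (1.0 + math.exp(-activation)))
    return probs


# Walk slowly around the latent space and print one 16-step pattern per point.
for z in circular_latent_path(8):
    pattern = to_onsets(toy_decoder(z))
    print("".join("x" if hit else "." for hit in pattern))
```

Because neighbouring points on the path are close together in latent space, a smooth decoder tends to produce patterns that change gradually from one bar to the next, which is what makes this kind of traversal musically useful.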

With this plugin device, users can train their own rhythm generation model and use it within their natural music production workflow. We are trying to democratize the use of AI in musical creative endeavors.